We are at a tipping point in the data economy. In the EU alone, it accounts for almost 3.6% of GDP and is on its way to crossing the trillion-euro threshold by 2030. From people to technologies, and from organisations to processes, companies need a robust data strategy that favours the innovations matching their maturity and aspirations while rejecting the others. Data innovation has historically been nurtured through an ecosystem of specialists, but we now see a wave of outside-in innovation where best practices are inherited from other disciplines, from financial management to product development and DevOps. In this article, we will walk through five trends that could help you bring your data strategy to the next level.

Data as a product and the data product manager

Data and analytics teams have shown a strong ability to innovate and spark data initiatives. Using “fail fast and learn faster” approaches, they created data labs, mobilized tiger teams on proofs of concept, and demonstrated the benefits of data initiatives in a matter of weeks, based on the company’s pain points. But they then struggled to establish a sustainable operating model for data and analytics that also lays the ground for continuous improvement.

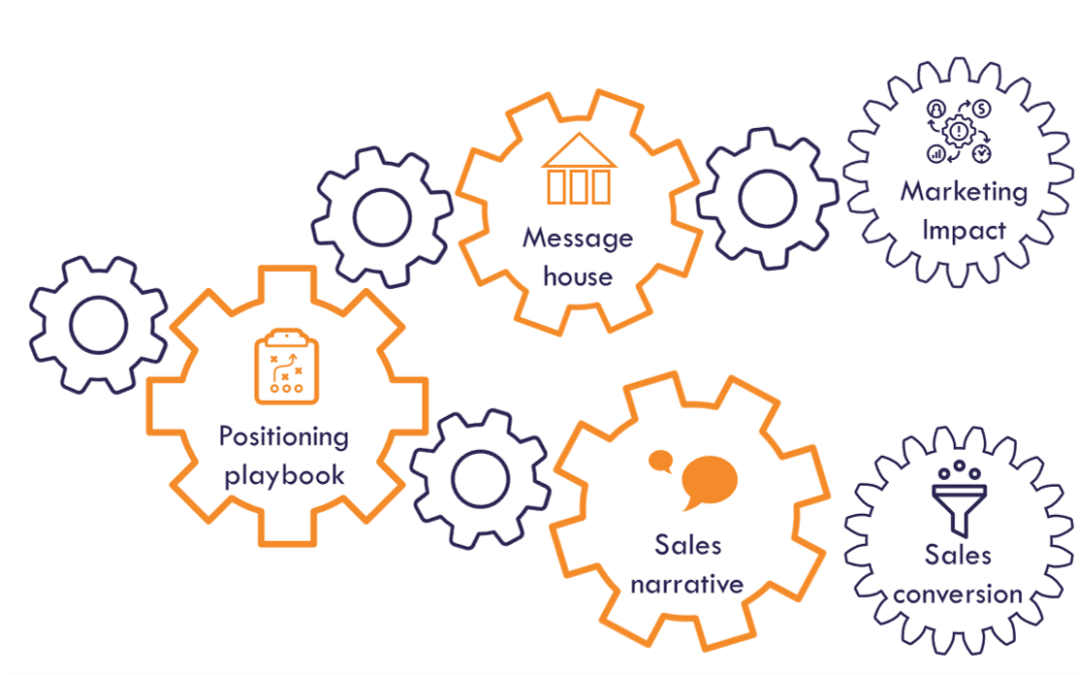

Although treating data as a product rather than as a project is not a new idea, its time has come for organizations that want to cross the data maturity tipping point. Applying product thinking to data has two major implications. The first one relates to consumption: a data product should be designed, and promoted, to be easily consumed by a diverse set of users across diverse use cases. Its go-to-market and overall life cycle should be explicitly managed, with an ongoing roadmap driven by usage requirements, regular releases, and features (which can be deprecated at some point), together with observability and feedback on how data is effectively consumed.

The second implication relates to productization. Applying product thinking raises the bar on how the product is managed over time. It requires formalized service level agreements, together with continuous testing and monitoring of usage, performance, and quality.

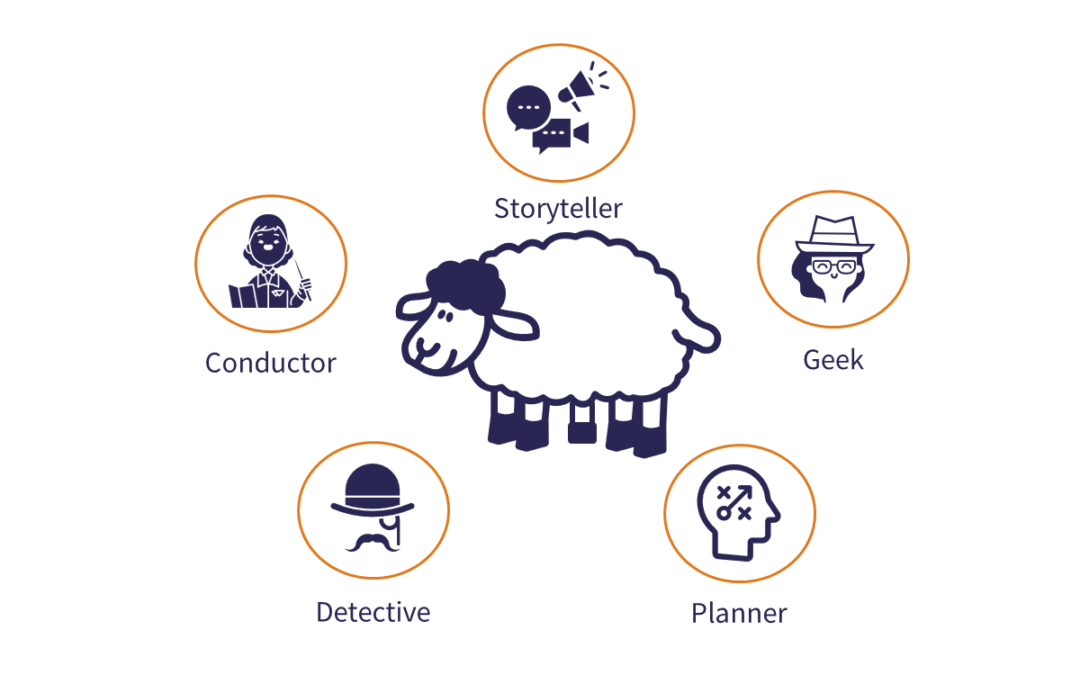

This brings a new duty for data teams, but the good news is that this discipline can be sourced from well-established product management and product marketing approaches. Welcome the data product manager as the new kid on the block in your data team, alongside data scientists, engineers, analysts, architects and stewards.

Data valuation and data monetization

In its latest CDO survey, PwC highlights the rise of Chief Data Officers, especially in Europe, where 41.6% of public companies have appointed a CDO (compared to 25.7% in 2021). The survey also points out that “the presence of a CDO is correlated with stronger performance than that achieved by organizations without a dedicated executive-level data role”.

Even though the value of data is now recognized at the board level, companies are still struggling to define its economic impact at a finer grain, as data is an intangible asset. This puts the CDO and the data teams in an uncomfortable position: they ramp up new data efforts with growing data costs, while the business shows greater impatience for tangible results.

A good resolution for data teams is to initiate and benchmark their data initiatives with an outcome-based approach, using data valuation methods for assessment and prioritization. Initiated nearly two decades ago, data valuation is still a nascent discipline, but methodologies inherited from financial management are coming into play and are increasingly documented in books, academic research papers, and white papers. Interestingly, data valuation (and monetization) approaches go hand in hand with data-as-a-product principles, as stated by Doug Laney in a recent article series.

Data minimisation and fitness

Data minimisation is a principle that came with data privacy, in the context of the Privacy by Design framework. It is explicitly expressed in the GDPR, which states that personal data must be “adequate, relevant and limited to what is necessary in relation to the purposes for which they are processed”. Other regulations, such as California’s privacy regulation (CPRA), also establish data minimisation principles.
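To make the “limited to what is necessary in relation to the purposes” idea concrete, here is a minimal Python sketch. It assumes that each processing purpose declares upfront the fields it needs; the purpose names, field names, and the `minimise` helper are all hypothetical illustrations, not part of any regulation or library.

```python
# Hypothetical mapping: each declared processing purpose lists the only
# fields it is allowed to receive. Names are illustrative assumptions.
ALLOWED_FIELDS = {
    "order_fulfilment": {"name", "shipping_address", "email"},
    "newsletter": {"email"},
}


def minimise(record: dict, purpose: str) -> dict:
    """Return a copy of the record restricted to the fields declared for the purpose."""
    if purpose not in ALLOWED_FIELDS:
        # An undeclared purpose gets no data at all rather than everything.
        raise ValueError(f"Undeclared purpose: {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}
```

Calling `minimise(customer, "newsletter")` on a full customer record would pass along only the email address. The design choice worth noting is the default: a purpose that has not been declared is refused, so adding a new use of the data forces an explicit decision about which fields it really needs.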

Because it arrived as a regulatory mandate while data teams were busy accelerating their big data efforts, the data minimisation principle initially sounded counterintuitive, if not controversial. But many organizations now realize that they must do a better job of understanding which data will create the most value for their business, and of ensuring it is fit for purpose. Consider data minimisation beyond data privacy: as a best practice to address information overload, and to prioritize data management and data governance efforts towards the data that matters the most. It will also help data teams align their initiatives with sustainability objectives. Mathieu Llorens, CEO of AT Internet, wrote a fascinating article on this “data ecology” idea back in 2019.

So, what if data fitness became a guiding principle across your data initiatives?

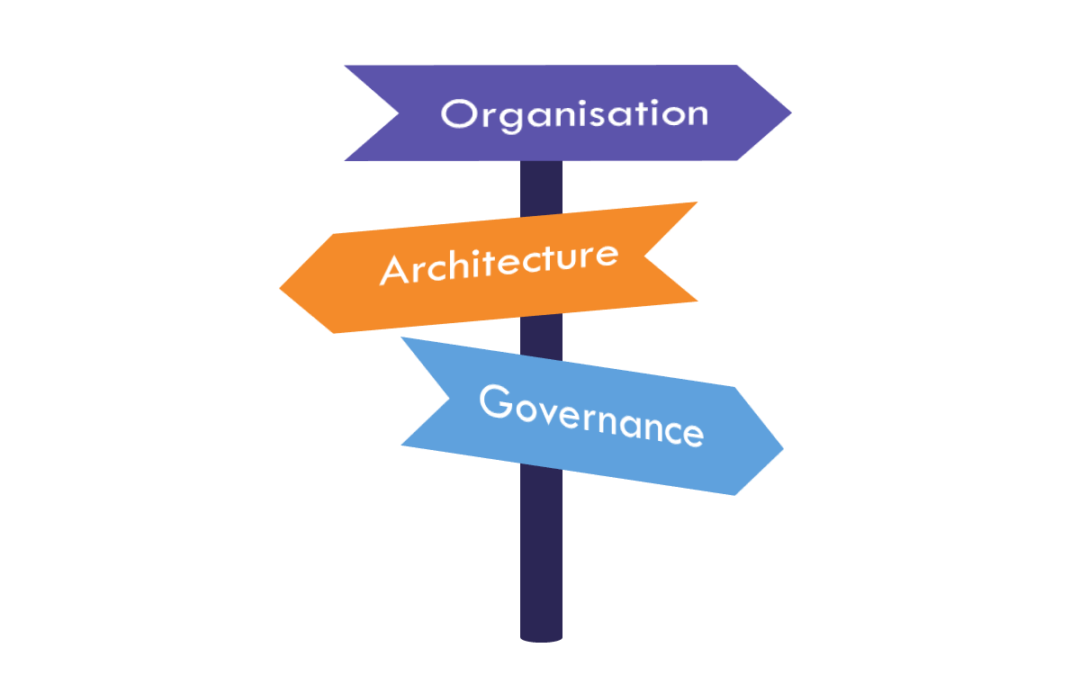

Data organisations, and the balancing act between centralization and decentralization

As data was elevated to the board level and attracted substantial, fast-growing investment, the question of how to scale its operating model has mainly been addressed through organizational centralization. Through central data offices or centres of excellence, organizations could reach critical mass, develop talent, set standards, and become a catalyst for a data culture by embedding business stakeholders into their squads across multiple data initiatives.

But at some point, the central organization becomes a bottleneck and is challenged by the business units on its ability to understand their business requirements and respond quickly. This drives a move towards decentralized ownership of data initiatives, where distinct data organizations are created for each business domain, embedding the data specialists into the business teams rather than the opposite.

Hence the popularity of the data mesh principles. But beware of the big bang: this is all about people and change management, rather than another technological shift. In addition, centralization versus decentralization is a balancing act and an incremental, ongoing journey rather than a movement towards a steady state. Beyond the data mesh hype, best practices for designing hybrid organizational approaches that balance agility and governance are being documented, such as this inspiring series on data & analytics operating models by Wayne Eckerson, or the lessons learned from BlaBlaCar’s data mesh journey.

Data observability, or when data governance meets DataOps

The data supply chain is complex and heterogeneous, from source systems to pipelines, from transient to final storage, and from packaged to ad-hoc consumption. In addition, data changes continuously, in both content and structure. This raises serious quality issues, to the point that data quality has stood out among the top three challenges for data initiatives for years. To bridge this trust gap, some organizations created dedicated data governance teams with operational roles for data curation, monitoring and quality control. But the risk of such an approach is that it confines responsibility for the wide data quality challenge to a small number of data stewards, putting them in a difficult position while discouraging all other stakeholders from collaborating on fixing the issue.

The time has come for DataOps teams to take broader responsibility for data health. Through service level agreements, continuous testing, and application monitoring, DataOps teams can raise their quality standards by specifying upfront what good data looks like, productizing the way they monitor performance and quality issues, and acting upon those issues before data is consumed. Observability changed the way software quality and performance are monitored using telemetry, and is now emerging as a promising new discipline for data.
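As an illustration of specifying upfront what good data looks like, the following Python sketch checks a batch of rows against a small set of declared expectations before it is released for consumption. It is a simplified assumption of how such a check could look, not a reference to any particular observability tool; the `Expectation` class, the `check_batch` function, and the thresholds are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Expectation:
    """Hypothetical, simplified data SLA for one dataset."""
    max_staleness: timedelta      # freshness: maximum age of the last load
    min_rows: int                 # volume: minimum number of rows per batch
    required_columns: tuple       # columns checked for missing values
    max_null_ratio: float         # completeness: tolerated share of nulls


def check_batch(rows, loaded_at, expectation, now=None):
    """Return a list of violation labels; an empty list means the batch is healthy."""
    now = now or datetime.now(timezone.utc)
    violations = []
    if now - loaded_at > expectation.max_staleness:
        violations.append("stale")
    if len(rows) < expectation.min_rows:
        violations.append("too_few_rows")
    for column in expectation.required_columns:
        nulls = sum(1 for row in rows if row.get(column) is None)
        # An empty batch counts as fully null for every required column.
        ratio = nulls / len(rows) if rows else 1.0
        if ratio > expectation.max_null_ratio:
            violations.append(f"nulls:{column}")
    return violations
```

A pipeline could call `check_batch` right after loading and hold back publication whenever the returned list is not empty. Recording those violation lists over time is one simple way to start treating data health as data in its own right.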

Beyond the hype, this is an opportunity for data teams to eat their own dog food by being data-driven. What if data health became a specific data domain in your data initiatives, improving your ability to fully understand and report on data health across your systems?

As data observability is all about automation, it requires advanced tools that can capture metadata, data lineage, and telemetry data. This market is in its early days, and in this respect, good resolutions should also apply to software vendors: while many data software and platform providers innovated through metadata models that could embed rich business meaning and data relationships, they did it through proprietary models, which resulted in data silos. Ultimately, this didn’t help to provide transparency and data observability across the data supply chain. Interestingly, a handful of renowned data-driven companies tackled this issue by developing their own tools and then sharing them as open source: Uber with OpenMetadata, Lyft with Amundsen, Netflix with Metacat, and LinkedIn with DataHub. Although none of those projects has reached wide industry adoption, they clearly highlight the need to make metadata as easy to crawl and access as content on the web.